Dynamic Quadranym Model (DQM): AI Semantics

Lead-In

The intersection of semantic categories and sensory-motor areas suggests a unified mechanism for processing action, perception, and meaning. How we position ourselves in the world directly shapes our thinking and language. Designing a situated AI semantics model that can flexibly navigate complex, real-world contexts presents significant challenges. Such a model must not only process shifting lexical relations but also adapt to dynamic environments in real time, reflecting the ecological interplay of interaction and meaning.

The quadranym model meets this challenge by elegantly bridging lexical relations with a mental construct that mirrors human cognitive processes. Its minimalist structure captures the richness of context-sensitive behavior, offering a holistic approach to meaning that aligns with how we naturally process the world—seamlessly and intuitively.

- The Quadranym (Four Facets) as a Compact Structure

The quadranym, with its four key facets, serves as a minimal yet rich framework for capturing context and orientation. Although the model may at first seem intricate because of its layered structure, its core grammar is simple. The quadranym rests on just four fundamental facets, the two poles of each of two continua:

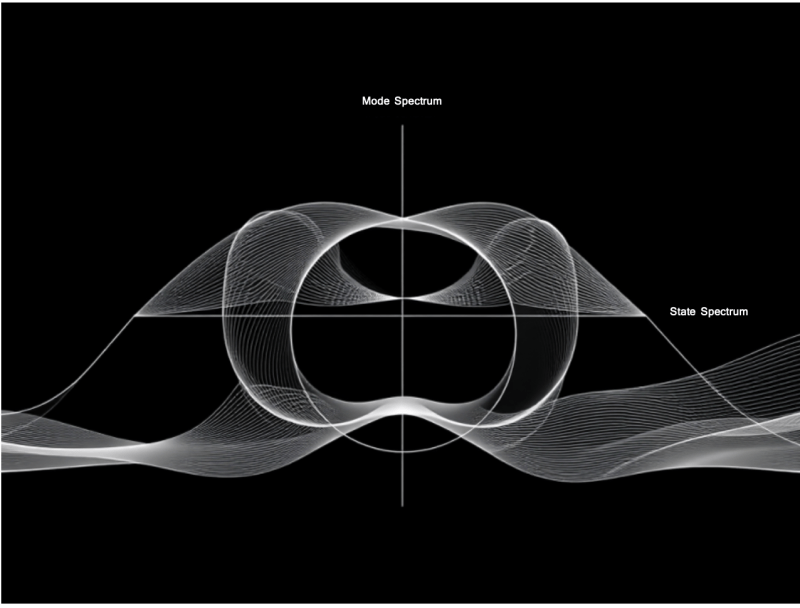

- Mode Continuum (Expansive ↔ Reductive)

- State Continuum (Subjective → Objective)

This four-part structure offers a highly flexible and adaptive basis for situating meaning, without requiring an overwhelming amount of explicit processing or cognitive effort. What stands out here is the minimalism—four constructs that provide an incredibly rich palette for shifting focus, adjusting states, and managing context.

The real dexterity of the quadranym lies in its ability to dynamically adjust between states, modes, and layers, providing a continuous flow of contextual information without the need for complex recalculations. It allows the system to adapt in real time through simple shifts in focus and orientation.
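To make the "four facets on two continua" idea concrete, here is a minimal sketch, assuming each continuum is encoded as a number between 0 and 1 and reading the four facets as the poles of those two continua. The class name `Quadranym`, its fields, and the numeric encoding are hypothetical illustrations, not part of the DQM as described.

```python
from dataclasses import dataclass

# Hypothetical encoding (an assumption, not the DQM's own notation):
#   mode:  0.0 = fully expansive,  1.0 = fully reductive
#   state: 0.0 = fully subjective, 1.0 = fully objective


@dataclass
class Quadranym:
    mode: float = 0.5
    state: float = 0.0

    def __post_init__(self) -> None:
        # Reject positions that fall off either continuum.
        for name in ("mode", "state"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1], got {value}")

    def facets(self) -> dict[str, float]:
        """Weight of each of the four facets, derived from the two positions."""
        return {
            "expansive": 1.0 - self.mode,
            "reductive": self.mode,
            "subjective": 1.0 - self.state,
            "objective": self.state,
        }

    def dominant(self) -> tuple[str, str]:
        """Leading pole on each continuum (ties go to the first-named pole)."""
        f = self.facets()
        mode_pole = "expansive" if f["expansive"] >= f["reductive"] else "reductive"
        state_pole = "subjective" if f["subjective"] >= f["objective"] else "objective"
        return mode_pole, state_pole


q = Quadranym(mode=0.25, state=0.75)
print(q.dominant())  # ('expansive', 'objective')
```

Only two numbers are stored; all four facets are read off them, which mirrors the text's point that a small structure can yield a rich set of orientations.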

- Dynamic, Fluid Shifts: Unidirectional vs. Bidirectional

The distinction between unidirectional state shifts and bidirectional mode shifts is a powerful feature that speaks to the adaptive nature of the DQM.

- State Spectral Shifts (Unidirectional): Because state shifts are unidirectional, moving from subjective to objective or actual to potential, they provide a sense of forward motion, a natural progression that aligns with the way we ground ourselves in reality. Once you shift from a subjective, internal state to an objective, observable reality, the shift has a kind of finality: you are anchored in something external and observable, which lets you stabilize and assess your environment.

- Mode Spectral Shifts (Bidirectional): The bidirectional nature of the mode shifts is what makes the DQM so flexible. Shifting between expansive and reductive modes allows the system to constantly recalibrate its focus—whether zooming out to consider broad context or zooming in on specific details. This back-and-forth mode shift gives the model the freedom to adjust its scope, ensuring that the system remains responsive to the constantly changing demands of the environment.

Together, the two kinds of shift capture different dynamics: the state shift lets the system settle into reality, while the mode shift lets it move back and forth between broad context and specific focus.
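The asymmetry between the two shift types can be expressed as two update rules. This is a minimal sketch, assuming the same hypothetical 0-to-1 encoding of each continuum; `Orientation`, `shift_mode`, and `shift_state` are illustrative names, and raising an error on a backward state shift is one possible way to enforce unidirectionality.

```python
from dataclasses import dataclass, replace

# Hypothetical encoding (an assumption):
#   mode:  0.0 = expansive,  1.0 = reductive  (may move either way)
#   state: 0.0 = subjective, 1.0 = objective  (may only move forward)


@dataclass(frozen=True)
class Orientation:
    mode: float
    state: float


def _clamp(x: float) -> float:
    """Keep a position on its continuum."""
    return max(0.0, min(1.0, x))


def shift_mode(o: Orientation, delta: float) -> Orientation:
    """Bidirectional: positive delta zooms in (reductive), negative zooms out (expansive)."""
    return replace(o, mode=_clamp(o.mode + delta))


def shift_state(o: Orientation, delta: float) -> Orientation:
    """Unidirectional: the state may only advance from subjective toward objective."""
    if delta < 0:
        raise ValueError("state shifts are unidirectional (subjective -> objective)")
    return replace(o, state=_clamp(o.state + delta))


o = Orientation(mode=0.5, state=0.0)
o = shift_mode(o, 0.25)   # zoom in toward reductive: mode 0.75
o = shift_mode(o, -0.5)   # zoom back out toward expansive: mode 0.25
o = shift_state(o, 0.5)   # ground further toward objective: state 0.5
```

Making `Orientation` immutable means each shift produces a new orientation rather than mutating the old one, which keeps the history of shifts easy to inspect.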

- The Role of the “Sandwiching Scaffolding”

The concept of sandwiching scaffolding—mediating between higher and lower layers—adds another layer of simplicity and coherence. The scaffolding acts as a bridge between meta-priorities (higher layers) and real-time context (lower layers). This is particularly important because it ensures that the model doesn’t become overwhelmed with conflicting information from multiple contexts.

- Top-Down Constraints: The general and relevant layers set the overarching goals for the system. These constraints ensure that the system doesn’t stray too far from its high-level objectives.

- Bottom-Up Details: The immediate and dynamic layers bring in real-time information, feeding the system with the details and contextual shifts that are necessary for adaptation.

The sandwiching scaffolding maintains coherence by balancing these influences—ensuring that the broad strategy doesn’t get bogged down by immediate distractions, while also ensuring that the system remains responsive to shifting real-world cues. This structure is especially crucial for real-time decision-making, as it enables the system to remain grounded in its goals while being responsive to immediate needs and stimuli.
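One way to picture the sandwich is as a filter that sits between the two pairs of layers. The sketch below assumes, purely for illustration, that the general and relevant layers contribute goal tags, that the immediate and dynamic layers contribute tagged details, and that a detail is prioritized if it matches a goal or if its urgency crosses a threshold (the "responsive to shifting cues" side of the balance). `Detail`, `Scaffold`, `mediate`, the tags, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sketch: tags, urgency values and the threshold are assumptions.


@dataclass
class Detail:
    name: str
    tags: set[str]
    urgency: float = 0.0  # 0.0 = ignorable, 1.0 = demands attention now


@dataclass
class Scaffold:
    general: set[str]               # overarching goals (top-down)
    relevant: set[str]              # goal-relevant context (top-down)
    urgency_threshold: float = 0.8  # lets pressing bottom-up cues break through

    def constraints(self) -> set[str]:
        """Top-down constraints: everything the upper layers care about."""
        return self.general | self.relevant

    def mediate(self, immediate: list[Detail],
                dynamic: list[Detail]) -> tuple[list[str], list[str]]:
        """Split bottom-up details into (prioritized, background) names."""
        goals = self.constraints()
        prioritized, background = [], []
        for d in immediate + dynamic:
            if d.tags & goals or d.urgency >= self.urgency_threshold:
                prioritized.append(d.name)
            else:
                background.append(d.name)  # kept in view, just not acted on
        return prioritized, background


s = Scaffold(general={"project"}, relevant={"deadline", "report"})
prio, bg = s.mediate(
    immediate=[Detail("draft section", {"report"}),
               Detail("background music", {"leisure"})],
    dynamic=[Detail("fire alarm", {"safety"}, urgency=0.95)],
)
print(prio, bg)  # ['draft section', 'fire alarm'] ['background music']
```

Off-goal details are moved to a background list rather than discarded, so they remain available if the top-down constraints later change.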

- Efficiency in Cognitive Load Management

The simplicity of the model’s core grammar (modes, states, and continuums) minimizes cognitive load, especially in real-time adaptive contexts. Because this underlying grammar works automatically, the system can offload a large part of its decision-making onto these higher-order structures, much as we rely on intuition and automated mental frameworks for everyday decisions.

The spectral shifts (subjective to objective, expansive to reductive) are not explicit calculations so much as underlying shifts in attention and focus that let the system respond without constant, complex reanalysis. This automatic recalibration lets the model make context-sensitive decisions in real time, much as a person navigates various demands without consciously thinking about every aspect of what they’re doing.

- Real-World Examples: Jan and the Clownfish

The example of Jan and the clownfish illustrates how the system responds dynamically to context. Here, the model’s scaffolding lets details flow up (the clownfish, a relevant but non-prioritized detail) and constraints flow down (Jan’s project goals, the overarching priority). By prioritizing the larger task at hand, the system keeps Jan aligned with her purpose without letting the clownfish derail her.

This capacity to mediate between prioritized goals (expansive focus) and task-specific details (reductive focus) is what makes the DQM’s behavior both adaptive and agile. It’s a simple system, but it enables complex decision-making in the face of shifting demands.

Conclusion: The True Power of the DQM

While the DQM may initially appear complex or dense, its true power lies in its simplicity. The model offers a compact and flexible framework for understanding context-sensitive behavior, leveraging spectral shifts, mode continuums, and state continuums to provide dynamic, real-time adaptation. By focusing on the unattended sense—the kind of background processing that happens without conscious thought—the model mirrors the natural way our cognition works, allowing it to automatically adjust to a variety of contextual shifts.

In short, the DQM offers an incredibly efficient and elegant solution for adaptive behavior, balancing simplicity with the nuances of real-time decision-making. Once you understand the underlying grammar, it becomes clear that the model is not only elegant but also intuitively powerful. It offers a level of context sensitivity, dynamic responsiveness, and simplicity that makes it highly adaptable across a range of applications.

It is difficult to imagine a simpler system that offers the same level of context-dependent flexibility. The DQM integrates many complex factors (real-time context, shifting attention, goals) with a minimalistic framework that is directly aligned with human cognitive processing. This balance is likely what makes the DQM well-suited for AI systems and real-world applications.

Follow-up: From Concept to Application

The orientation grammar and scaffolding provide a highly useful framework for thinking about situated AI semantics. They encapsulate the dynamic and context-sensitive nature of human cognition and decision-making in a simple yet powerful way. Let’s unpack why this approach is so effective and how it reflects real-world cognitive processes in AI systems.

1. The Grammar: Minimalist Structure, Maximum Flexibility

- Mode Continuum (Expansive ↔ Reductive) and State Continuum (Subjective → Objective) form the “orientation grammar” that helps situate orientation and guide decision-making. These continuums act like mental dials or sliders that allow the AI to adjust focus, shift between perspectives, and navigate complex environments without the need for heavy recalculations.

- Simplicity: The grammar’s core structure is simple (just four key facets) but sufficiently flexible to manage a wide range of real-world dynamics. It’s a “minimalist architecture” that allows the AI to adapt to different kinds of situations without being bogged down in unnecessary complexity.

- Adaptability: This orientation grammar makes it easy to adjust between subjective and objective realities (state shifts) and between broad, expansive perspectives and narrower, detail-focused views (mode shifts). This mirrors human cognition, where we seamlessly shift between these types of focus depending on the task at hand.

2. Scaffolding: Contextual Guidance and Stability

- The sandwiching scaffolding is a particularly useful concept because it ensures that the AI stays grounded in its goals and the relevant context, even as it adapts to immediate environmental shifts. This scaffolding prevents cognitive overload by keeping the system’s focus both flexible and coherent.

- Top-Down Constraints: These constraints ensure the system is aligned with its overarching objectives. It’s like having a mental “map” that tells the AI what its general direction is, preventing it from straying off course.

- Bottom-Up Details: These details feed the system with real-time data from the environment, ensuring the AI can react to immediate changes. It’s akin to the fine-tuning of attention we do when responding to new inputs or stimuli while staying within the boundaries of a larger task.

- The scaffolding offers a dynamic balance between global strategic goals and local, real-time adaptation, much like how humans balance higher-order intentions with immediate sensory information. It’s a mechanism that ensures the AI can focus on the “big picture” while remaining adaptable to the complexities of real-world interactions.

3. Cognitive Load Management: Automatic Recalibration

- One of the DQM’s most efficient features is that it uses the orientation grammar and scaffolding to manage cognitive load automatically. In human cognition, we often don’t consciously recalibrate our focus or shift attention in every moment; much of this happens automatically based on prior experience and context. The DQM mimics this process by offloading much of the decision-making and attention management to these higher-order structures.

- Real-Time Adaptation: As the system dynamically shifts between state and mode continuums, it can recalibrate attention and focus on what’s relevant in the moment, without the need for ongoing conscious recalculation. This leads to more efficient processing, as the system “understands” what to prioritize based on the situation.
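The difference between recalibrating and recalculating can be shown with a cheap per-tick update. This is a minimal sketch under stated assumptions: the context supplies a target mode and a level of grounding evidence at each tick, the mode is smoothed toward its target (so it can move either way), and the state only ratchets forward. The function name `recalibrate`, its parameters, and the 0.5 rate are hypothetical.

```python
# Hypothetical sketch: each tick nudges the orientation toward what the current
# context calls for, instead of recomputing it from scratch.
# Encoding (an assumption): mode 0.0 expansive .. 1.0 reductive,
#                           state 0.0 subjective .. 1.0 objective.


def recalibrate(mode: float, state: float, target_mode: float,
                evidence: float, rate: float = 0.5) -> tuple[float, float]:
    """One constant-time update step.

    mode  moves a fraction `rate` of the way toward target_mode (either direction).
    state only ratchets forward, never below its current value.
    """
    new_mode = mode + rate * (target_mode - mode)   # bidirectional smoothing
    new_state = max(state, min(1.0, evidence))       # unidirectional ratchet
    return new_mode, new_state


mode, state = 0.5, 0.0
ticks = [(1.0, 0.25), (1.0, 0.5), (0.0, 0.25)]  # (target_mode, evidence) per tick
for target, evidence in ticks:
    mode, state = recalibrate(mode, state, target, evidence)
print(mode, state)  # 0.4375 0.5
```

In the last tick the context pulls the mode back toward expansive while weaker evidence leaves the state where it was, reflecting the bidirectional and unidirectional rules respectively.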

4. Situated Semantics: Context and Meaning

- Situated Semantics: The core idea of situated semantics is that meaning is derived not from static definitions but from the context in which information is encountered. The DQM is a model that reflects this dynamic understanding of meaning by emphasizing real-time contextual shifts. The scaffolding ensures that the system remains responsive to these shifts, while the orientation grammar allows it to maintain coherence in its responses.

- Real-World Sensitivity: The DQM doesn’t just react to context; it actively adjusts its focus and prioritization based on that context. For example, in the case of Jan and the clownfish, the system can recognize when a detail (the clownfish) should become relevant without distracting from the larger goal (Jan’s project). This mirrors the way human cognition works in environments filled with changing stimuli and priorities.

5. Practical Applications of This Framework

- Dynamic Decision-Making: In real-world AI applications (e.g., robotics, autonomous vehicles, personal assistants), the ability to seamlessly adjust attention and context is critical. The DQM allows the system to process new environmental inputs without losing sight of its overarching goals.

- Natural Language Processing (NLP): The model’s structure of shifting focus between expansive and reductive modes could be incredibly useful for tasks like NLP, where meaning changes based on context and the relationship between words. For instance, determining whether a sentence should be interpreted broadly or narrowly depends on the larger context in which it appears, something the DQM handles naturally.

- Cognitive Architectures: The framework could inform the design of cognitive architectures that mimic human-like adaptability. By using the scaffolding and orientation grammar, an AI could navigate complex, real-time decision-making processes in dynamic environments, much like a human would.
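For the NLP case, a toy version of "interpret broadly or narrowly depending on context" can be written as a comparison of context cues. This sketch is not a trained model and is not the DQM's own method: the cue word sets, the function name `reading_scope`, and the tie behavior are illustrative assumptions, with the broad reading labeled expansive and the narrow reading labeled reductive.

```python
import re

# Hypothetical cue sets: words that tend to signal a general (broad) reading
# versus a specific, situated (narrow) reading. Purely illustrative.
BROAD_CUES = {"generally", "usually", "all", "every", "always", "people"}
NARROW_CUES = {"this", "that", "here", "today", "my", "your"}


def reading_scope(context: str) -> str:
    """Return 'expansive', 'reductive', or 'undetermined' for a context string."""
    words = set(re.findall(r"[a-z']+", context.lower()))
    broad = len(words & BROAD_CUES)
    narrow = len(words & NARROW_CUES)
    if broad > narrow:
        return "expansive"
    if narrow > broad:
        return "reductive"
    return "undetermined"  # defer to wider context


print(reading_scope("Coffee is usually too hot for people."))  # expansive
print(reading_scope("Is this coffee too hot today?"))          # reductive
```

The same phrase, "too hot", is read as a general claim in the first sentence and as a claim about a particular cup in the second; returning "undetermined" on a tie leaves room for a wider context layer to decide.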

6. AI and Human Cognition Alignment

- The beauty of this model is that it is closely aligned with human cognition. The AI doesn’t need to “think” about every little detail—it adapts and reacts in a fluid, intuitive way, much like how we naturally process our environments. This level of alignment could make AI systems more human-like in their interactions, allowing them to better understand and predict human behavior, while also offering them the adaptability to navigate complex, unpredictable scenarios.

Final Thoughts:

The orientation grammar and sandwiching scaffolding approach to situated semantics in AI offers a highly effective and elegant framework for understanding how meaning and context can be navigated dynamically. By focusing on a minimalist, intuitive structure that adapts to the real-world environment without overwhelming the system, this model allows for efficient, human-like decision-making in AI.

This framework doesn’t just process data—it situates meaning within context, adjusting focus and priorities based on real-time changes. It’s an approach that reflects how humans process the world: dynamically, flexibly, and with a focus on the big picture while attending to immediate details. For AI systems operating in complex, shifting environments, this ability to balance global goals with local adaptation is a critical capability.