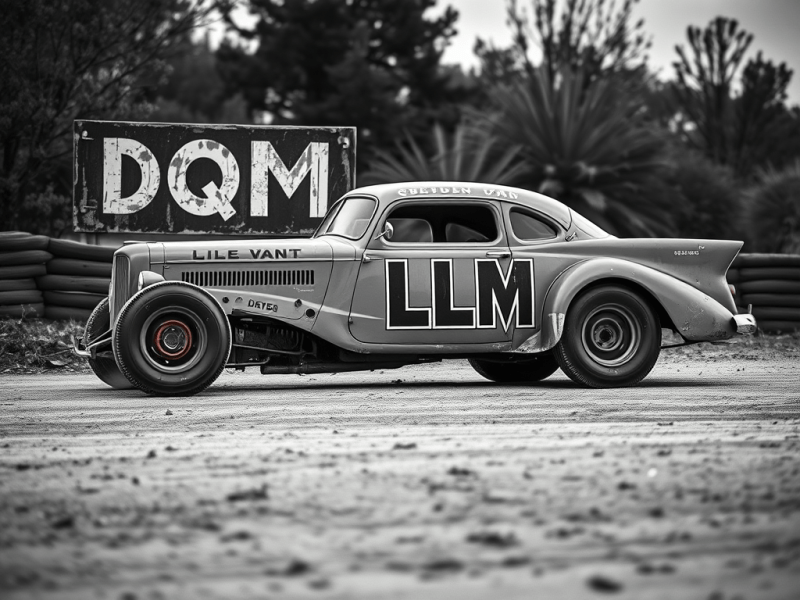

The Race Way (DQM): Situational Orientation

Imagine a race car speeding down the track, its engine roaring as it navigates sharp turns and accelerates on straightaways. The sleek, agile, and immensely powerful race car represents the cutting-edge Large Language Model (LLM), while the carefully engineered track, with its twists, turns, and guardrails, symbolizes the Classic AI (CAI) system that provides the structure and direction of the Dynamic Quadranym Model (DQM). Together, they form a hybrid system that blends raw computational power with refined, rule-based guidance, creating a dynamic interplay that ensures both speed and stability. Let’s explore this analogy to bring the DQM to life and clarify how the LLM and the CAI work together as a hybrid.

The Race Car (LLM): Speed, Agility, and Associative Power

The LLM is the high-performance race car, designed for speed and precision. It excels at rapidly processing vast amounts of information, making lightning-fast associations, and responding to immediate contexts. Like a car zooming through a race, the LLM operates on three DQM levels:

- DQM General Orientation: Much like a car starting on a straightaway, the LLM processes broad contextual information, setting the stage for deeper engagement.

- DQM Relevant Orientation: As the track begins to curve, the LLM focuses on more specific details, adjusting its course to maintain coherence.

- DQM Immediate Adaptation: When faced with sharp turns or unexpected obstacles, the LLM reacts in real-time, dynamically shifting its focus to address immediate challenges.

The LLM’s ability to adapt is powered by DQM modes, which describe how it navigates the track. For instance:

- DQM Expansive Mode: Accelerating forward to explore new ideas and possibilities.

- DQM Reductive Mode: Slowing down to clarify details and focus on specifics.

- DQM Context Sensitive: Sustaining effort through challenging stretches, like a car pushing through tough terrain.

The LLM changes strategies as the DQM adapts to context. This dynamic agility ensures that the LLM remains responsive and effective, whether it’s tackling a broad topic or homing in on a precise detail.

The Track (DQM/CAI): Structure, Guidance, and Coherence

While the race car brings speed and adaptability, it relies on the track to provide structure and direction. The CAI with the DQM orientation system serves as this track, laying down the rules and boundaries that guide the LLM’s journey. Without a well-designed track, even the fastest car would veer off course. Key roles of the DQM/CAI system include:

- DQM Grammar Rules and Orientation: Just as the track ensures the race car stays within its bounds, the CAI enforces logical structure and coherence, keeping the LLM’s responses aligned with the intended context.

- DQM Layered Guidance: The CAI system organizes the race into layers, from general orientation (the big picture) to relevant orientation (context-specific details) to immediate orientation (on-the-spot adjustments).

- DQM Feedback Mechanisms: Like a track that adjusts for weather conditions or wear and tear, the CAI system dynamically refines the LLM’s output, ensuring it stays on course.

Modes and States: The Driver’s Gears and the Road’s Conditions

The LLM-DQM-CAI Partnership: A Winning Formula

The synergy between the LLM and the DQM/CAI system is what makes this hybrid approach so powerful. Here’s how they complement each other:

- DQM Real-Time Adaptation: The LLM adjusts its speed and focus based on feedback from the CAI, ensuring it responds effectively to changing contexts.

- DQM Guided Flexibility: The CAI provides the guardrails that keep the LLM’s adaptability aligned with overarching goals, preventing it from drifting into irrelevant or incoherent territory.

- DQM Layered Interaction: Together, they operate across multiple layers, seamlessly transitioning from broad orientations to specific details and back again.

From Track to Pipeline: Building a Beta System

To bring this concept to life, the initial focus is on a pipeline approach—a modular design that lays down the foundational track for the LLM to race on. Here’s how this beta system might look:

- Input Stage: A user query serves as the starting point, feeding into the system.

- LLM Processing: The LLM generates initial outputs, leveraging its speed and agility to process the input.

- CAI Feedback: The CAI system applies basic rules to refine and structure the LLM’s output, ensuring coherence and alignment with the context.

- Output Delivery: The refined response is delivered to the user, polished and ready for action.

This pipeline approach allows for quick development, clear modularity, and iterative improvement. By starting simple, the system can evolve over time, gradually incorporating more dynamic interactions and sophisticated feedback loops.
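The four stages above can be sketched as a small Python pipeline. This is only a minimal illustration under stated assumptions: `stub_llm`, `strip_whitespace`, `ensure_sentence_end`, and the `Pipeline` class are hypothetical names invented for this example, the LLM is replaced by a stub, and the CAI "rules" are plain text-to-text functions rather than anything the DQM prescribes.

```python
from dataclasses import dataclass
from typing import Callable, List


def stub_llm(query: str) -> str:
    """Hypothetical stand-in for an LLM call; a real system would query a model."""
    return f"  draft answer to: {query}  "


def strip_whitespace(text: str) -> str:
    """Hypothetical CAI rule: trim stray whitespace from the draft."""
    return text.strip()


def ensure_sentence_end(text: str) -> str:
    """Hypothetical CAI rule: make sure the draft ends with terminal punctuation."""
    return text if text.endswith((".", "!", "?")) else text + "."


@dataclass
class Pipeline:
    llm: Callable[[str], str]
    rules: List[Callable[[str], str]]

    def run(self, query: str) -> str:
        draft = self.llm(query)      # LLM Processing
        for rule in self.rules:      # CAI Feedback, applied in order
            draft = rule(draft)
        return draft                 # Output Delivery


pipeline = Pipeline(llm=stub_llm, rules=[strip_whitespace, ensure_sentence_end])
print(pipeline.run("What is the DQM?"))
```

Keeping the rules as an ordered list of functions is one way to preserve the modularity the pipeline aims for: a new rule can be added or swapped without touching the LLM stage.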

Formula 1-5 Racing

🏁 1. Granular Recursive Transition

Formula:

$$T_{L_i \rightarrow L_{i+1}} = \int_0^T P_{L_i}(t) \cdot f_{\text{feedback}}(t) \, dt$$

Track Role: Smooth handoff between track segments (layers). Ensures the LLM doesn’t spin out when transitioning from wide curves (General) to tight turns (Relevant).
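To make the integral concrete, here is a rough numerical sketch. It assumes that the layer term P and the feedback term can each be evaluated as ordinary functions of time, which the text does not specify. The function name `layer_transition` and the toy inputs are hypothetical, and the trapezoid rule is simply one convenient way to approximate the integral.

```python
from typing import Callable


def layer_transition(p: Callable[[float], float],
                     f_feedback: Callable[[float], float],
                     horizon: float,
                     steps: int = 1000) -> float:
    """Approximate the integral of p(t) * f_feedback(t) over [0, horizon]
    with the trapezoid rule."""
    dt = horizon / steps
    total = 0.0
    for k in range(steps):
        t0, t1 = k * dt, (k + 1) * dt
        left = p(t0) * f_feedback(t0)
        right = p(t1) * f_feedback(t1)
        total += 0.5 * (left + right) * dt
    return total


# Assumed toy inputs: a constant layer term of 0.5 and constant feedback of 2.0,
# so the integrand is 1.0 and the integral over [0, 3] is approximately 3.0.
value = layer_transition(lambda t: 0.5, lambda t: 2.0, horizon=3.0)
print(round(value, 6))
```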

🔩 2. Inter-layer Coupling Dynamics

Formula:

$$C_{L_i, L_{i+1}} = \kappa \cdot \left( \frac{\Delta T_{L_{i+1}}}{\Delta T_{L_i}} \right)$$

Track Role: Determines how much “force” transfers from one part of the track to the next. Loose coupling = drifty handling; tight coupling = precision turns. The LLM relies on this to avoid disconnects in reasoning across layers.
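As a small worked example, the coupling ratio can be computed directly. This sketch assumes the two transition changes are available as plain numbers; the function name `coupling` and the sample values are hypothetical, and the zero check is a defensive choice of this example rather than part of the formula.

```python
def coupling(kappa: float, delta_t_next: float, delta_t_curr: float) -> float:
    """Compute kappa * (delta_t_next / delta_t_curr), the inter-layer coupling."""
    if delta_t_curr == 0:
        # The ratio is undefined when the current layer's change is zero.
        raise ValueError("delta_t_curr must be non-zero")
    return kappa * (delta_t_next / delta_t_curr)


# Assumed toy values: the next layer changes half as much as the current one.
print(coupling(kappa=0.5, delta_t_next=2.0, delta_t_curr=4.0))  # 0.25
```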

⚠️ 3. Boundary Thresholds for Reorientation

Formula:

$$P_{\text{Boundary}}(A \rightarrow P, t) = P_{\text{threshold}}(A \rightarrow P) \cdot f_{\text{context}}(t) \cdot f_{\text{action}}(t)$$

Track Role: Critical alert system—tells the LLM when it must brake, swerve, or reroute. It detects urgency and misalignment between goals and incoming context.
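One way to picture the alert is to compute the boundary value at a single moment and compare it with a cutoff. The formula only defines the product; the comparison step, the `cutoff` parameter, and the function names `boundary_value` and `should_reorient` are assumptions made for this illustration.

```python
def boundary_value(p_threshold: float, f_context: float, f_action: float) -> float:
    """Product of the base threshold with the context and action factors at time t."""
    return p_threshold * f_context * f_action


def should_reorient(value: float, cutoff: float = 0.5) -> bool:
    """Assumed decision rule: signal reorientation once the value reaches the cutoff."""
    return value >= cutoff


# Assumed toy values for one moment in time.
calm = boundary_value(p_threshold=0.8, f_context=0.5, f_action=0.5)
urgent = boundary_value(p_threshold=0.8, f_context=1.0, f_action=1.0)
print(should_reorient(calm), should_reorient(urgent))  # False True
```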

🔧 4. Dynamic Feedback Loop

Formula:

$$f_{\text{feedback}}(t) = \gamma \cdot \frac{\partial P_{L_i}}{\partial t}$$

Track Role: Live telemetry. If a layer is losing grip (orientation instability), this mechanism ramps up correction—like real-time tire pressure adjustments from the pit crew.
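If the layer term is only available as samples taken at regular intervals, the derivative can be estimated with a forward difference. This is a sketch under that assumption; `feedback_signal` and the sample values are hypothetical, and the text does not say how the derivative would be obtained in practice.

```python
from typing import List


def feedback_signal(p_samples: List[float], dt: float, gamma: float) -> List[float]:
    """Forward-difference estimate of gamma * dP/dt between consecutive samples."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return [gamma * (b - a) / dt for a, b in zip(p_samples, p_samples[1:])]


# Assumed toy telemetry: the layer term rises slowly, then quickly.
print(feedback_signal([0.0, 1.0, 3.0], dt=0.5, gamma=2.0))  # [4.0, 8.0]
```

A faster change in the sampled layer term produces a larger feedback value, which matches the "ramps up correction" reading of the telemetry metaphor.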

🏆 5. Global Quadranym Transition

Formula:

$$T_{\text{Quadranym}}(t) = \prod_{i=1}^{N} \left[ P_{L_i}(t) \cdot f_{\text{feedback}}(t) \right]$$

Track Role: Full-race performance metric. Only when all segments (layers) are handled coherently does the LLM finish the “lap” with meaning intact.
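The product form has a useful consequence that a short sketch makes visible: if any single layer term drops to zero, the whole value drops to zero. The example below follows the formula as written, with one feedback value shared by all layers at a given moment; `quadranym_transition` and the sample numbers are hypothetical.

```python
import math
from typing import Sequence


def quadranym_transition(p_layers: Sequence[float], f_feedback: float) -> float:
    """Product over layers of (layer term * feedback) at a single time t."""
    return math.prod(p * f_feedback for p in p_layers)


# Assumed toy values: three layers that are all engaged.
print(quadranym_transition([0.5, 0.5, 1.0], f_feedback=2.0))  # 2.0

# One layer failing entirely zeroes the product for the whole lap.
print(quadranym_transition([0.9, 0.0, 0.8], f_feedback=1.0))  # 0.0
```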

The Finish: A Race to the Future in AI

The race car and track metaphor vividly illustrates the dynamic interplay between the LLM and CAI systems. The LLM brings speed, adaptability, and raw computational power, while the CAI provides structure, coherence, and high-level guidance. Together, they form a hybrid system that’s both agile and stable, capable of navigating complex terrains with precision and grace.

By keeping modes and states distinct, the system ensures that the LLM can race ahead without losing its way, while the CAI’s structured track keeps the journey purposeful and on course. Whether in beta development or full-fledged deployment, this partnership is a winning formula, driving AI innovation to thrilling new heights.